Introduction

Welcome to Securing 365, a space where I, Cody McLees, will share with you upcoming updates from Microsoft 365, best practices, and things to look out for in your own implementations.

In today’s segment, we will cover what to consider when adopting AI tools, specifically Microsoft Copilot, in a Microsoft 365 environment.

1. Data Privacy and Compliance

Data privacy and compliance are listed first for a reason: this is where the most serious problems arise if these tools are not adopted properly. You need to fully understand how Copilot integrates with your data and how it handles that data, and then make sure that aligns with the way you want to use the tool.

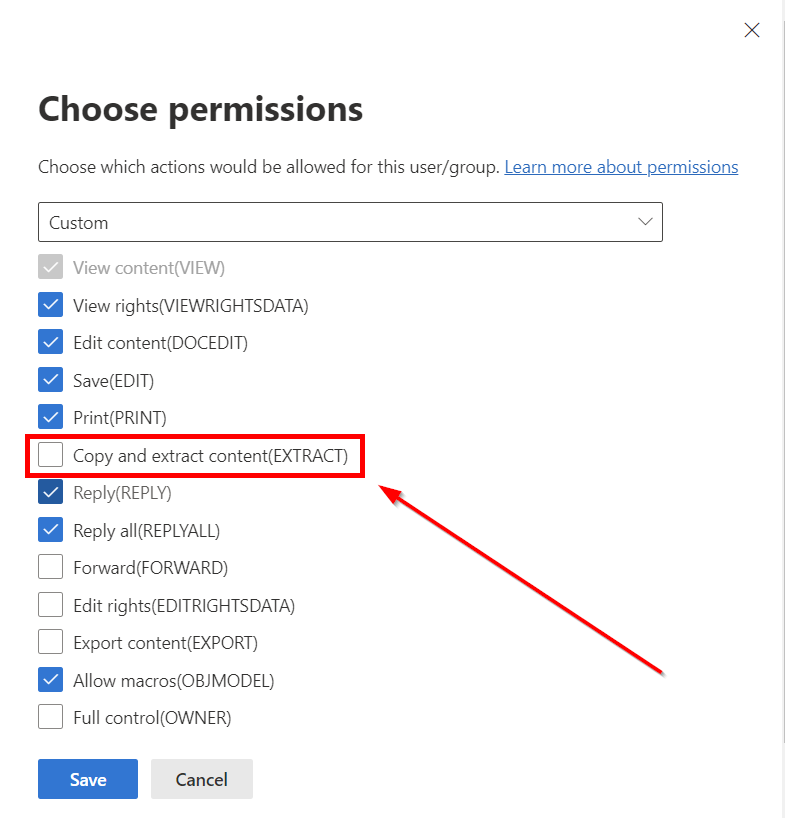

Once that is determined, you may find there are certain types of data that you do not want Copilot to interact with. If so, Data Classification and Labeling will likely be your answer. The recommendation is to create a data classification taxonomy that defines the levels of protection your organization will adopt. Your technical team then implements sensitivity labels based on that written policy. One or more of your labels may indicate to users that the data it covers is not available to Copilot. For those labels, configure the label’s encryption permissions to exclude the “EXTRACT” usage right. When you do this, state in the label description and in your end-user communications that Copilot will not be able to interact with files tagged with this classification.
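To illustrate the EXTRACT logic, here is a minimal Python sketch that models each label’s encryption settings as a set of usage-right strings and checks whether Copilot could work with content carrying that label. It assumes Microsoft’s published guidance that Copilot needs both the VIEW and EXTRACT usage rights on encrypted content. The `SensitivityLabel` class, the `copilot_can_use` helper, and the label names are hypothetical, and none of this calls a real Purview API.

```python
from dataclasses import dataclass, field

# Assumption based on Microsoft's guidance for Copilot and sensitivity labels:
# on encrypted content the user needs both VIEW and EXTRACT for Copilot to use it.
COPILOT_REQUIRED_RIGHTS = frozenset({"VIEW", "EXTRACT"})


@dataclass
class SensitivityLabel:
    """Hypothetical, simplified model of a label's encryption settings."""
    name: str
    applies_encryption: bool
    usage_rights: frozenset = field(default_factory=frozenset)


def copilot_can_use(label: SensitivityLabel) -> bool:
    """Return True if Copilot could work with content carrying this label."""
    if not label.applies_encryption:
        # Usage rights are only enforced when the label applies encryption.
        return True
    # Every required right must be present in the label's granted rights.
    return COPILOT_REQUIRED_RIGHTS <= label.usage_rights


# Hypothetical taxonomy: "Restricted - No AI" keeps VIEW/EDIT but drops EXTRACT.
labels = [
    SensitivityLabel("General", applies_encryption=False),
    SensitivityLabel("Confidential", True, frozenset({"VIEW", "EDIT", "EXTRACT", "PRINT"})),
    SensitivityLabel("Restricted - No AI", True, frozenset({"VIEW", "EDIT", "PRINT"})),
]

for label in labels:
    print(f"{label.name}: Copilot access = {copilot_can_use(label)}")
```

Running it prints one line per label; only the hypothetical “Restricted - No AI” label, which keeps VIEW but drops EXTRACT, comes back as blocked.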

The last part of this is very important, but since I’m just a tech guy, I’ll keep it high level. Make sure your legal and compliance teams review and approve the use of Copilot in your environment. Remember those End User Acceptance agreements that no one reads? Well, your legal team may want to review this one.

Key Steps:

- Review Microsoft’s usage and data handling policies for Copilot and make sure they align with your organization’s requirements.

- Implement data classification and labeling in Microsoft Purview to control how sensitive information is accessed and processed.

- Engage your legal and compliance teams early to understand any potential data privacy risks and ensure your organization’s use of Copilot complies with relevant regulations such as GDPR, HIPAA, or CCPA.

2. Identity and Access Management (IAM)

The next obvious topic is Identity and Access Management. It matters even more once an AI tool is active: a threat actor who takes over one of your users’ accounts will know to try Copilot once they are in. Copilot will give the attacker the same answers it would give the legitimate user, supercharging their attack and their ability to get sensitive information out of your organization.

The biggest piece here is re-evaluating your Conditional Access policies and Zero Trust approach. Make sure your users are performing MFA. Make sure you require compliant devices. Make sure your users can only register security info from trusted locations. The list goes on and on.
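As a sketch of the MFA and compliant-device requirement, the Python below builds a request body in the shape of a Microsoft Graph `conditionalAccessPolicy`. Treat it as an illustration under assumptions: the display name and the excluded group ID are placeholders (the exclusion stands in for an emergency access account group), and the code only prints the JSON rather than sending it to your tenant.

```python
import json


def build_ca_policy(display_name: str, excluded_group_ids: list) -> dict:
    """Build a Conditional Access policy body requiring MFA AND a compliant device."""
    return {
        "displayName": display_name,
        # Report-only mode: evaluate and log, but do not enforce yet.
        "state": "enabledForReportingButNotEnforced",
        "conditions": {
            "clientAppTypes": ["all"],
            "applications": {"includeApplications": ["All"]},
            "users": {
                "includeUsers": ["All"],
                # Placeholder: e.g. a group holding break-glass accounts.
                "excludeGroups": excluded_group_ids,
            },
        },
        "grantControls": {
            # AND = the user must satisfy every listed control, not just one.
            "operator": "AND",
            "builtInControls": ["mfa", "compliantDevice"],
        },
    }


policy = build_ca_policy(
    "Require MFA and compliant device - all users",
    ["00000000-0000-0000-0000-000000000000"],  # placeholder group ID
)

# Not sent here. An app or admin with Conditional Access write permissions would
# POST this body to https://graph.microsoft.com/v1.0/identity/conditionalAccess/policies
print(json.dumps(policy, indent=2))
```

Starting in report-only mode lets you check the sign-in logs for impact before switching `state` to `enabled`.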

The next portion of this is checking your SharePoint Online permissions. Do you have “Everyone” permissions granted somewhere? Do you have a legacy SharePoint topology that could be causing unintentional oversharing? If you don’t know, you may want to double-check. I’ve had many customers turn Copilot on, then have users surface information that was not relevant to them or not meant for their eyes, just from a simple query.
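If you want a quick first pass at the “Everyone” question, a minimal sketch like the one below can scan a permissions export for tenant-wide principals. The CSV layout, column names, and sample rows are hypothetical; adapt them to whatever permissions report you actually pull from SharePoint.

```python
import csv
import io

# Group claims that typically indicate tenant-wide access in SharePoint Online.
BROAD_PRINCIPALS = {"everyone", "everyone except external users"}

# Hypothetical export: one row per (site, principal, role).
SAMPLE_EXPORT = """site_url,principal,role
https://contoso.sharepoint.com/sites/HR,HR Members,Edit
https://contoso.sharepoint.com/sites/Finance,Everyone except external users,Read
https://contoso.sharepoint.com/sites/Legacy,Everyone,Contribute
https://contoso.sharepoint.com/sites/Projects,Project Owners,Full Control
"""


def find_overshared(export_text: str) -> list:
    """Return (site, principal, role) tuples granted to a tenant-wide principal."""
    flagged = []
    for row in csv.DictReader(io.StringIO(export_text)):
        # Case-insensitive match against the broad-access claims.
        if row["principal"].strip().lower() in BROAD_PRINCIPALS:
            flagged.append((row["site_url"], row["principal"], row["role"]))
    return flagged


for site, principal, role in find_overshared(SAMPLE_EXPORT):
    print(f"Review {site}: '{principal}' has {role}")
```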

The last bit is around your user account hygiene. Are your users provisioned and deprovisioned appropriately? Are there accounts out there that haven’t been signed into in a long time? Who is approved to get a Copilot license? What would happen to your organization if a former employee still had access and was able to ask Copilot some sensitive questions?
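The hygiene questions above can be turned into a simple recurring review. This Python sketch flags enabled accounts with no sign-in in the last 90 days (or none at all) and Copilot licenses still assigned to disabled accounts. The records, field names, and 90-day threshold are all assumptions for illustration; in practice the data would come from your identity and licensing reports.

```python
from datetime import date, timedelta

# Assumption: choose a threshold that matches your own account policy.
STALE_AFTER = timedelta(days=90)

# Hypothetical account records.
accounts = [
    {"upn": "alex@contoso.com", "enabled": True,
     "last_sign_in": date(2024, 5, 20), "copilot_license": True},
    {"upn": "jordan@contoso.com", "enabled": True,
     "last_sign_in": date(2023, 11, 2), "copilot_license": True},
    {"upn": "sam@contoso.com", "enabled": True,
     "last_sign_in": None, "copilot_license": False},
    {"upn": "former@contoso.com", "enabled": False,
     "last_sign_in": date(2023, 1, 15), "copilot_license": True},
]


def review_accounts(records, today):
    """Return (stale enabled accounts, Copilot licenses on disabled accounts)."""
    stale, wasted_licenses = [], []
    for acct in records:
        last = acct["last_sign_in"]
        # Enabled but never signed in, or not signed in within the threshold.
        if acct["enabled"] and (last is None or today - last > STALE_AFTER):
            stale.append(acct["upn"])
        # A disabled account still holding a Copilot license.
        if not acct["enabled"] and acct["copilot_license"]:
            wasted_licenses.append(acct["upn"])
    return stale, wasted_licenses


stale, wasted = review_accounts(accounts, today=date(2024, 6, 1))
print("Stale enabled accounts:", stale)
print("Copilot licenses on disabled accounts:", wasted)
```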

Key Steps:

- Require multi-factor authentication for all users across all Microsoft 365 workloads.

- Ensure users only have access to the resources they need. Clean up permissions and remove SharePoint sites and libraries that no longer need to be accessed.

- Remove or disable accounts belonging to terminated users, as well as accounts that are no longer used.

3. Identify Approved AI Apps, Block the Rest

So far we have legal and compliance approval and our Zero Trust architecture is affirmed, so let’s let this AI tool loose. But wait: there are a ton of these tools and we’ve only approved one. How should we handle the rest?

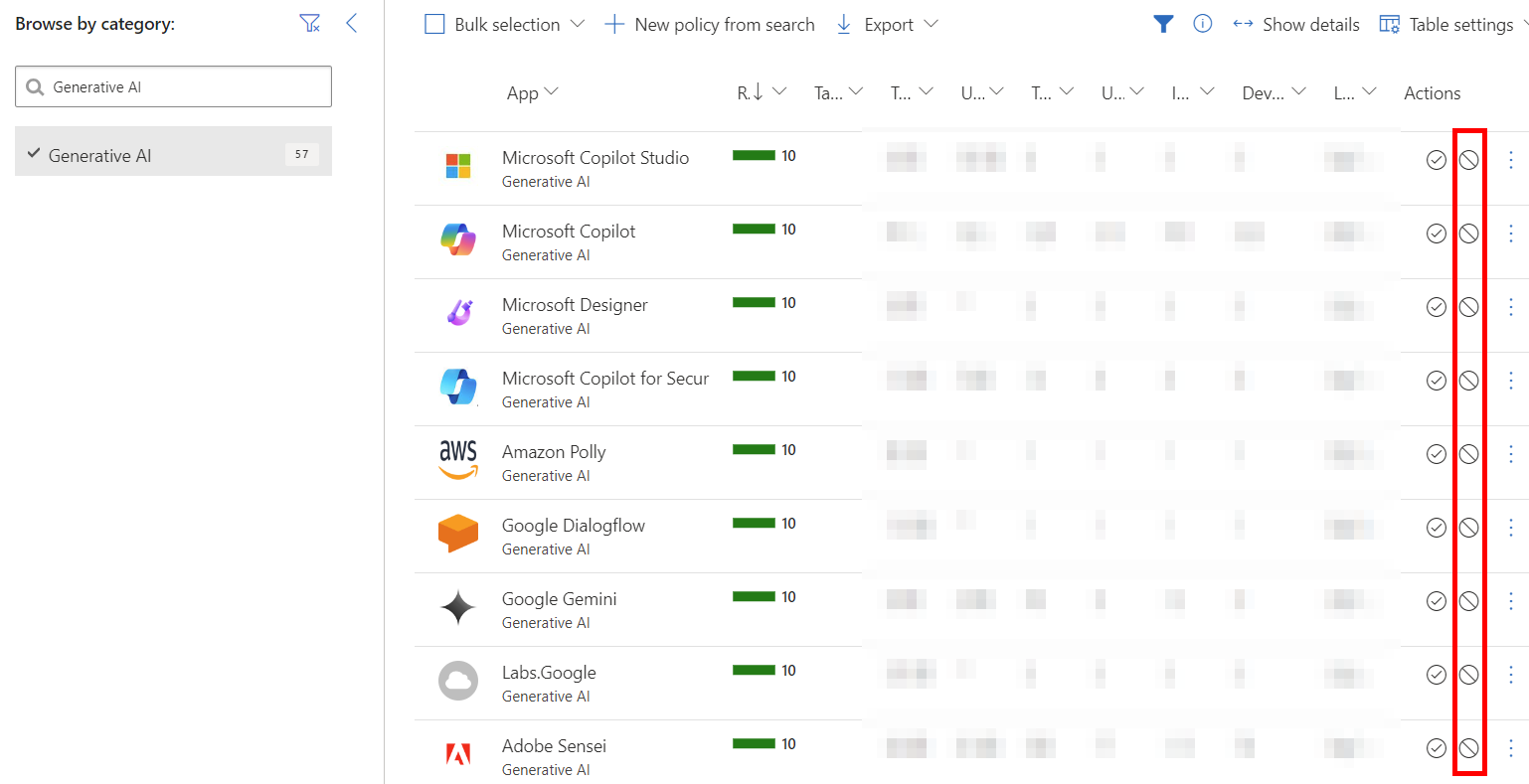

Consider onboarding your endpoints to Defender for Endpoint. From there, use Defender for Cloud Apps to mark all other AI tools (ChatGPT, Google Bard, etc.) as unsanctioned. This setting flows down to your endpoints, where Defender for Endpoint blocks those apps’ URLs and domains as indicators.

Marking the apps you select as unsanctioned will affect all devices onboarded to Defender for Endpoint, so expect the change to be widespread. Also keep in mind that some websites or tools may rely on these applications behind the scenes without showing it on the front end. If applications or sites break after this change, this is the first place to look!
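One way to think through the sanctioning decision before you click anything is the allowlist-first sketch below: anything not approved is unsanctioned by default, while apps you suspect other tools depend on get the Monitored tag first so you can watch for breakage. The app names and the pending-review set are hypothetical, and the code only plans the tags; it does not call Defender for Cloud Apps.

```python
# Hypothetical generative AI apps surfaced by Cloud Discovery.
discovered_ai_apps = ["Microsoft Copilot", "ChatGPT", "Google Bard", "ExampleAIWriter"]

# Your organization's approved list, from the legal/compliance decision.
approved_ai_apps = {"Microsoft Copilot"}

# Apps that may be relied on behind the scenes; observe before blocking.
pending_review = {"ExampleAIWriter"}


def plan_app_tags(discovered, approved, pending):
    """Return a mapping of app name -> planned Defender for Cloud Apps tag."""
    plan = {}
    for app in discovered:
        if app in approved:
            plan[app] = "Sanctioned"
        elif app in pending:
            plan[app] = "Monitored"  # watch usage first, block later if appropriate
        else:
            plan[app] = "Unsanctioned"  # default: blocked on onboarded endpoints
    return plan


for app, tag in plan_app_tags(discovered_ai_apps, approved_ai_apps, pending_review).items():
    print(f"{app}: {tag}")
```

Defaulting to Unsanctioned keeps newly discovered AI tools blocked until someone makes a decision, which matches the "approve one, block the rest" approach.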

Key Steps:

- Onboard devices to Defender for Endpoint, then use Defender for Cloud Apps to detect the usage of other AI cloud apps.

- Determine your organization’s list of approved AI apps, and block the other apps by marking them as unsanctioned in Defender for Cloud Apps.

4. Utilize Microsoft Purview for Compliant AI Usage

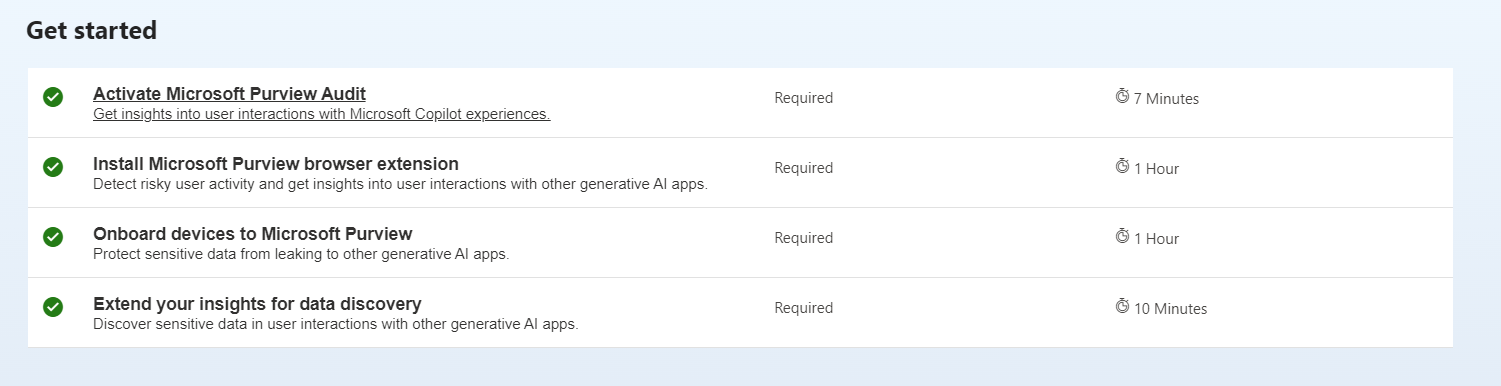

Microsoft has recently made the AI Hub available within Microsoft Purview. This is an excellent resource for organizations that want very specific guidance on how to monitor the usage of these tools. The AI Hub recommends specific policies that you can enable in your tenant within DLP, Communication Compliance, and Insider Risk Management.

Prerequisites

There are specific prerequisites that need to occur in your tenant for the AI Hub to work properly.

Activate Microsoft Purview Audit – this is enabled by default and should already be checked off for you.

Install Microsoft Purview Browser Extension – https://learn.microsoft.com/en-us/purview/dlp-chrome-get-started

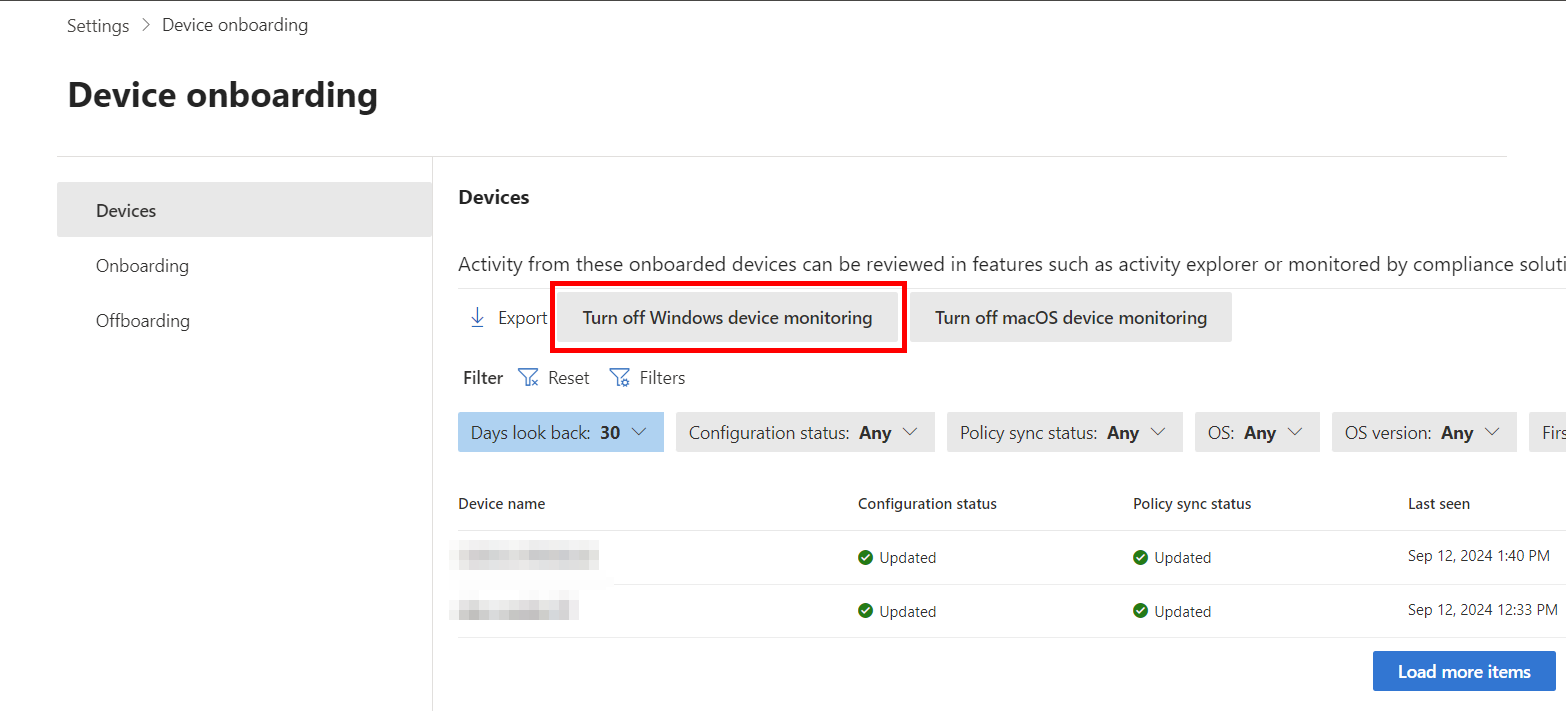

Onboard devices to Microsoft Purview – If your devices are already onboarded to Defender for Endpoint, you just need to enable device monitoring within Purview. As shown in the image below, the option reads “Turn on Windows device monitoring”: https://compliance.microsoft.com/compliancesettings/deviceonboarding

Extend your insights for data discovery – This will prompt you to create two policies: one Insider Risk Management policy and one DLP policy. We cover both below:

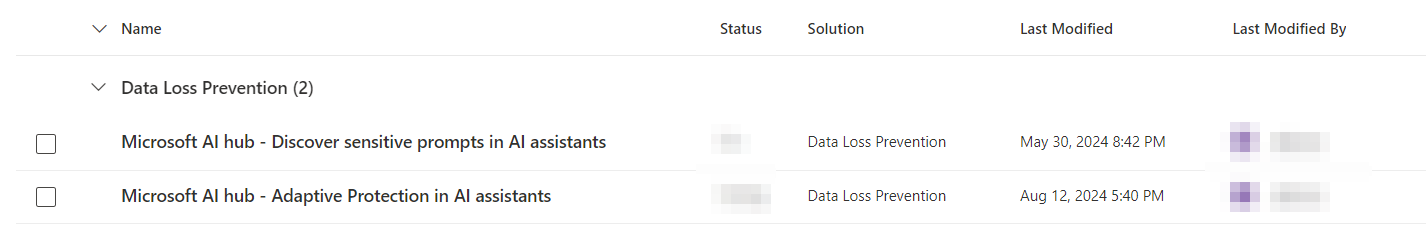

Data Loss Prevention (DLP)

The AI Hub recommends creating two DLP policies. Both focus on detecting sensitive information being pasted into other AI assistants and alert when this activity occurs.

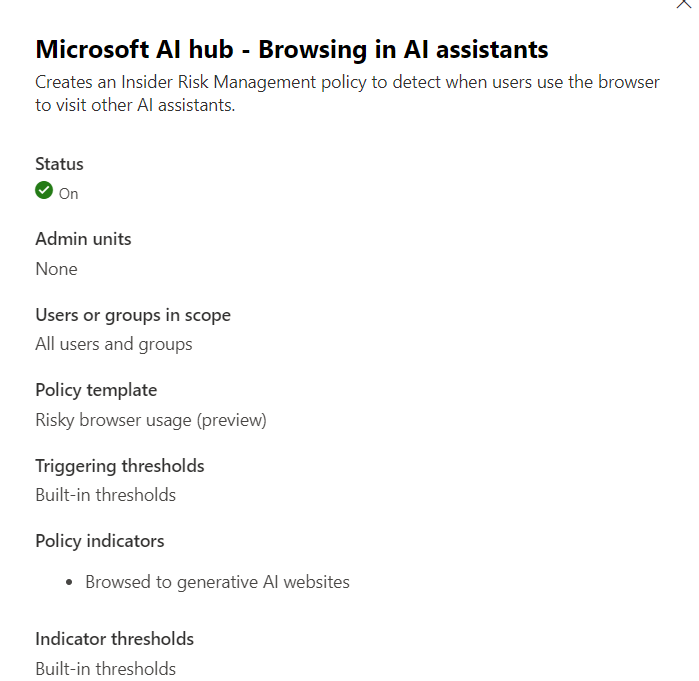
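To show roughly what these policies look for, here is a simplified Python sketch that checks text pasted into an AI prompt for something that looks like a payment card number, using a digit pattern plus the Luhn checksum. This is not how Purview implements its sensitive information types, which also weigh keywords, proximity, and confidence levels; the function names and sample prompt are hypothetical.

```python
import re

# Candidate card numbers: 13-16 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        n = int(ch)
        if i % 2 == 1:
            n *= 2
            if n > 9:
                n -= 9
        total += n
    return total % 10 == 0


def contains_card_number(pasted_text: str) -> bool:
    """Return True if pasted text appears to contain a valid card number."""
    for match in CARD_CANDIDATE.finditer(pasted_text):
        digits = re.sub(r"[ -]", "", match.group())
        # Length check plus checksum cuts down on random digit runs.
        if 13 <= len(digits) <= 16 and luhn_valid(digits):
            return True
    return False


prompt = "Summarize this order: card 4111 1111 1111 1111, exp 12/26"
print("Alert:" if contains_card_number(prompt) else "OK:", prompt)
```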

Insider Risk Management

The Insider Risk Management policy raises a user’s risk level in your tenant when they browse to AI assistant websites.

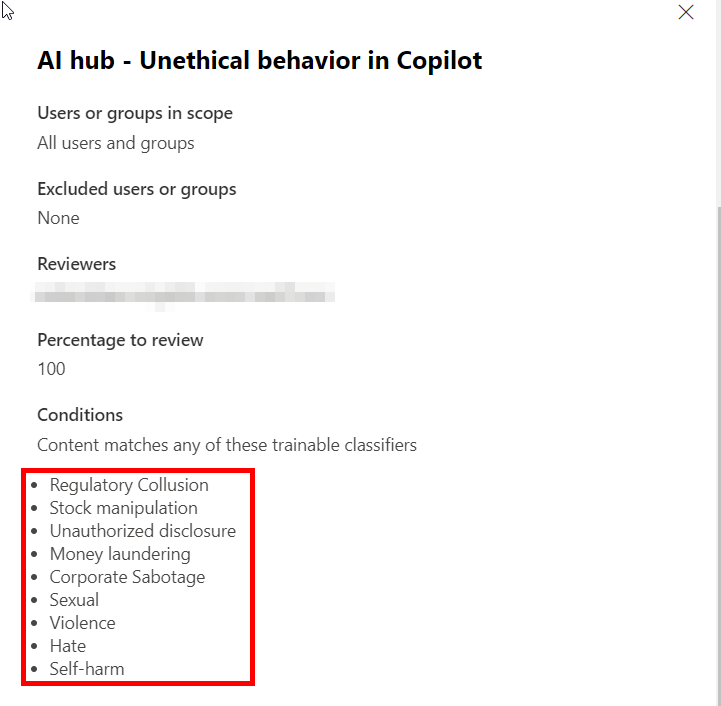

Communication Compliance

This policy detects users who are using Copilot for inappropriate purposes. The trainable classifiers it uses are shown in the following screenshot. It is up to your organization to determine what you would consider “unethical” use of Copilot.

Key Steps:

- Use the Microsoft Purview AI Hub to build policies that analyze user adoption and behavior within Copilot.

- Determine which trainable classifiers within Communication Compliance apply to Copilot interactions.

5. User Education and Awareness

At this point, we’ve got all the technical guardrails in place and we have legal and compliance agreement; now we just need to show users how to use the tool. You may also want to work with your legal and compliance teams on an “AI Acceptable Use Policy,” similar to a mobile device acceptable use policy. This policy would tell users that they are allowed to use approved AI tools, and it can also be framed to protect the organization if the tool uncovers sensitive information not meant for the user.

Training and adoption are key for tools like this; otherwise, these expensive licenses may be assigned to your users and go unused. A train-the-trainer approach works well: several people get trained on the tools, then bring that knowledge back to their teams and show real-world applications. Something I’ve also seen work is a Copilot User Group that meets regularly to discuss what is and isn’t working. These meetings bring diverse ideas and use cases to the licensed user base and help more users take advantage of the tools at their disposal.

Key Steps:

- Create an AI Acceptable Use Policy for your organization, and block all AI usage for a user until they have signed it.

- Create a training and adoption series focused on appropriate and successful use of Microsoft Copilot.

- Consider an optional internal Copilot User Group to discuss successes and failures with the tool.