As organizations integrate AI-driven tools like Microsoft Copilot into their workflows, security leaders must address a critical question: How do we protect AI access while ensuring users have a seamless experience?

One of the most effective ways to protect Microsoft Copilot is to use Microsoft-native tools like Entra Conditional Access. Conditional Access is the platform we already use to secure the other parts of Microsoft 365 and Azure, so we will use it to set the rules for accessing AI as well.

Now that AI is popular with our end users, it is also popular with threat actors. Any capable threat actor who takes over a mailbox as part of a business email compromise should be considering using Copilot or other integrated AI solutions to scan for sensitive information even faster. Threat actors are doing this, just not quite as quickly as I would have thought. This gives us the opportunity to layer in security before it becomes an even bigger problem. Let’s consider protecting our AI tools as much as, or more than, the rest of our platforms. Specifically, what we are going to focus on today is protecting Microsoft Copilot with Conditional Access by requiring phishing-resistant MFA (to protect against instances of token theft).

Prerequisites

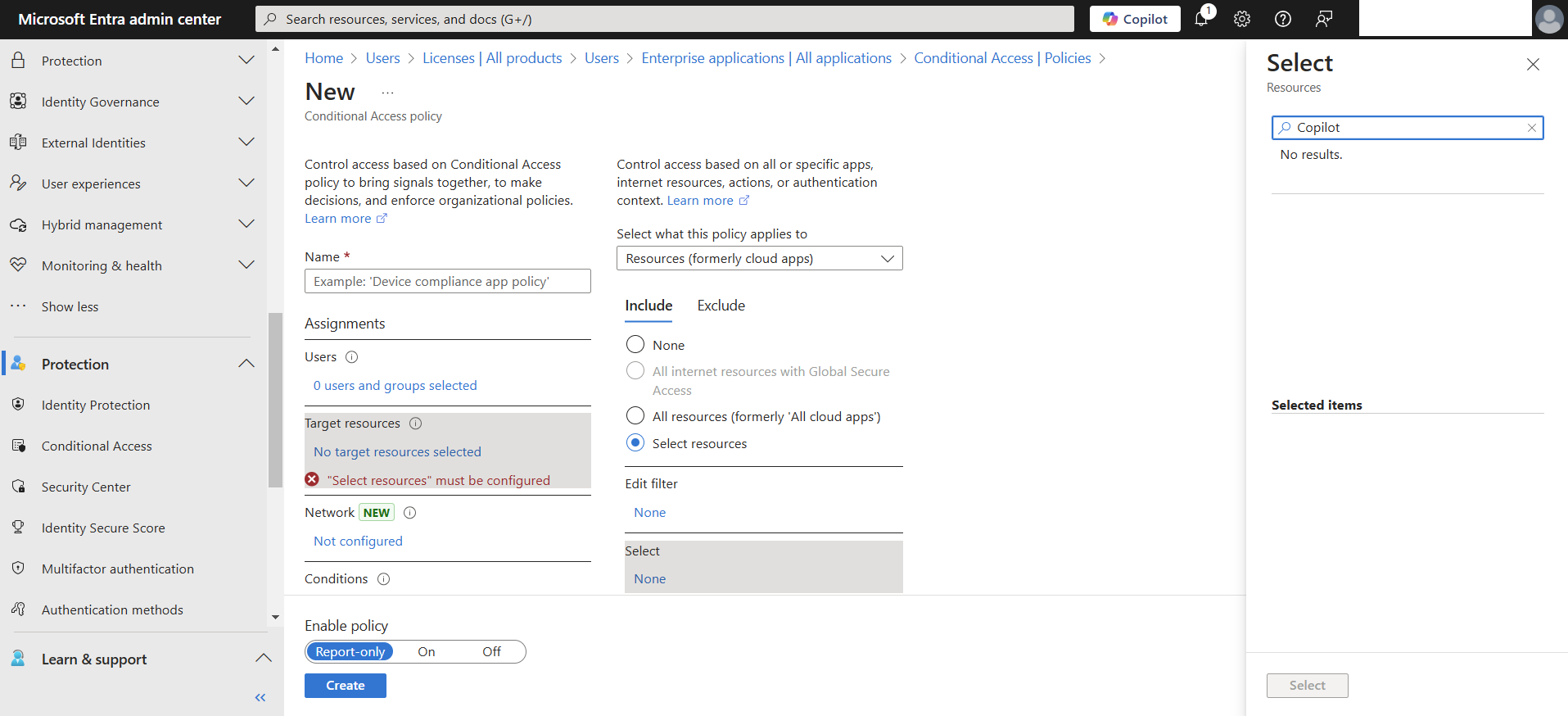

Protecting Copilot with Conditional Access should be pretty simple, right? Wrong. Of course there is some unneeded complexity, but that’s why you’re here, and I will help you out. First of all, when we look at our Conditional Access policy, we may notice that there aren’t any enterprise applications available with Copilot in the name.

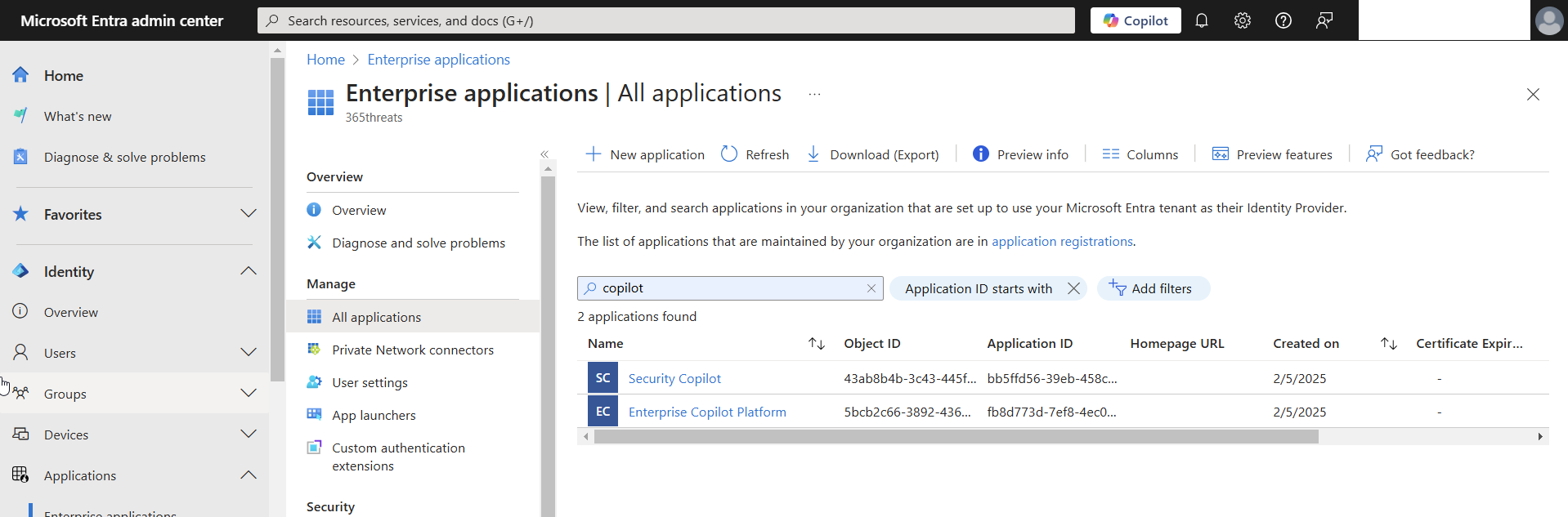

Now that we know Copilot isn’t there, the pre-work is to register the enterprise apps for Copilot and Security Copilot, following Microsoft’s documentation.

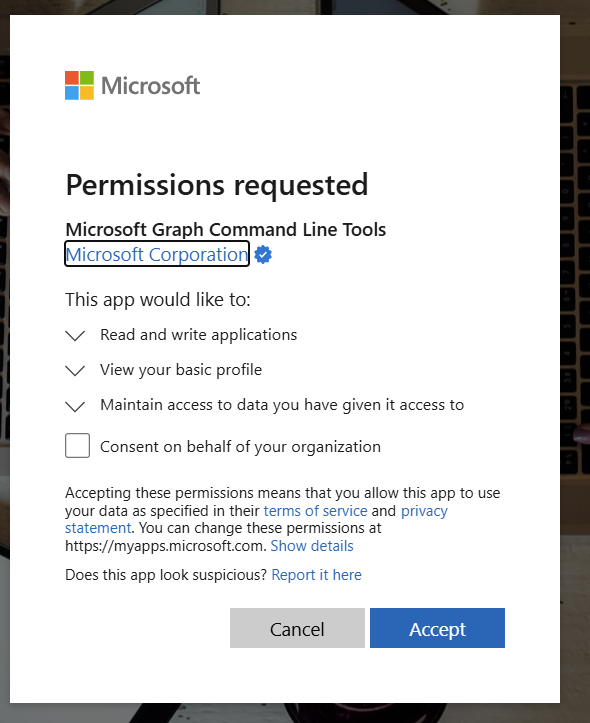

To create the service principals (enterprise apps), we first connect to Microsoft Graph via PowerShell using the following code:

# Connect with the appropriate scopes to create service principals

Connect-MgGraph -Scopes "Application.ReadWrite.All"

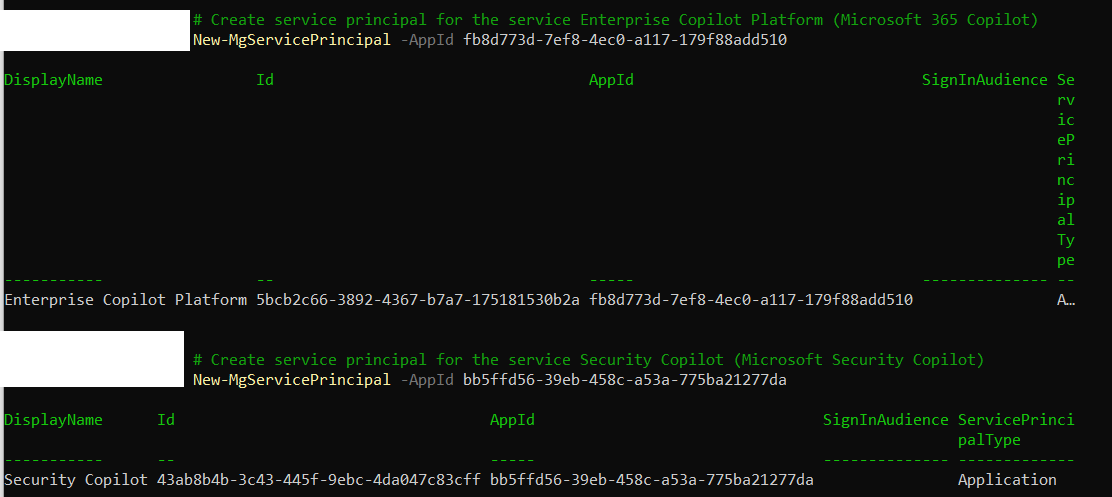

Next we will create two service principals, one for Enterprise Copilot Platform (Microsoft 365 Copilot) and one for Security Copilot (Microsoft Security Copilot).

# Create service principal for the service Enterprise Copilot Platform (Microsoft 365 Copilot)

New-MgServicePrincipal -AppId fb8d773d-7ef8-4ec0-a117-179f88add510

# Create service principal for the service Security Copilot (Microsoft Security Copilot)

New-MgServicePrincipal -AppId bb5ffd56-39eb-458c-a53a-775ba21277da

Apologies for the odd formatting in the PowerShell window, but you can see the service principals for both apps have been created in this tenant. If we go straight into Conditional Access and try to create our policy, we will see that the apps aren’t available to select yet. In my experience, it can take a few hours for these apps to show up. Sit tight, they will be there soon!
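
While you wait, you can confirm from PowerShell that both service principals exist in the tenant. This is a minimal sketch that assumes you are still connected to Microsoft Graph from the step above; the `$copilotAppIds` variable name is only for illustration.

```powershell
# App IDs taken from the New-MgServicePrincipal commands above
$copilotAppIds = @(
    'fb8d773d-7ef8-4ec0-a117-179f88add510'  # Enterprise Copilot Platform
    'bb5ffd56-39eb-458c-a53a-775ba21277da'  # Security Copilot
)

foreach ($appId in $copilotAppIds) {
    # Returns nothing if the service principal has not been created in this tenant
    Get-MgServicePrincipal -Filter "appId eq '$appId'" |
        Select-Object DisplayName, AppId, Id
}
```

If both apps come back here but are still missing from the Conditional Access app picker, that matches the delay described above and more waiting is likely all that is needed.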

Configuration

Now that the apps are present and validated in our Enterprise Apps list, we can jump over to Conditional Access. It can also take some time for these apps to show up in Conditional Access!

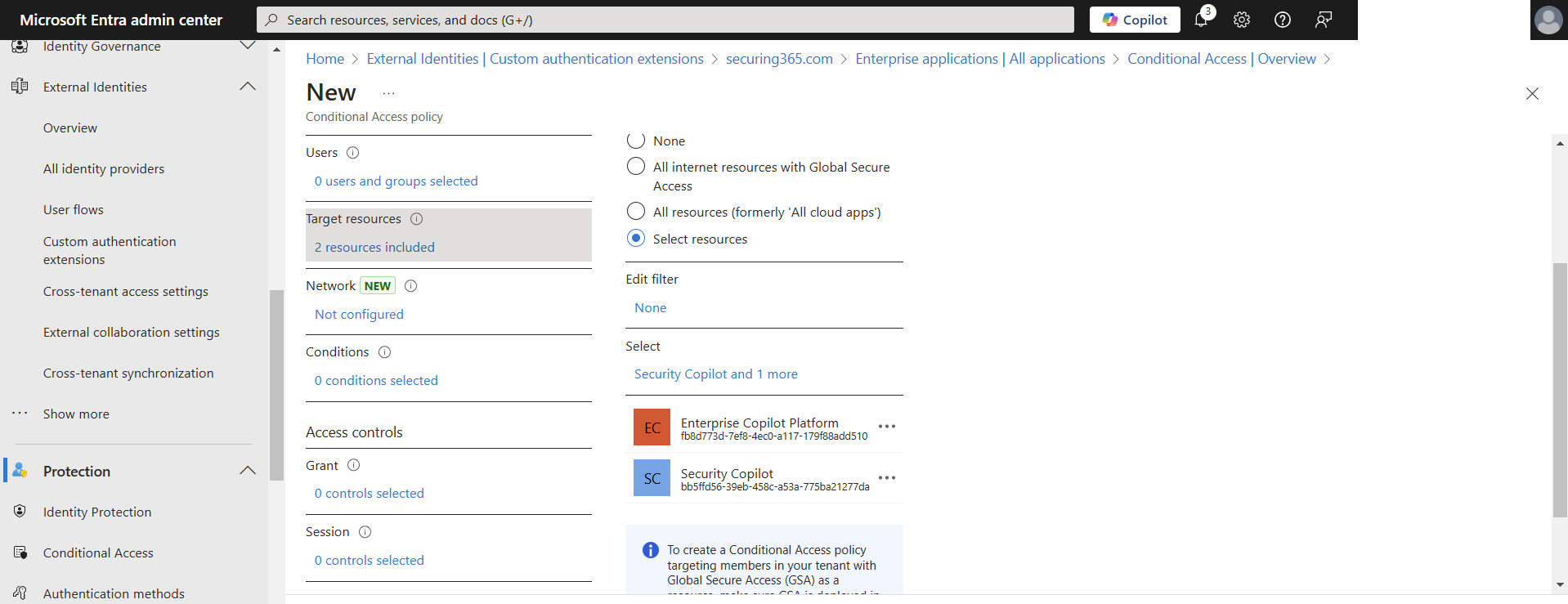

When creating the policy, I recommend searching for exactly the applications we are targeting. The two apps are: Security Copilot and Enterprise Copilot Platform.

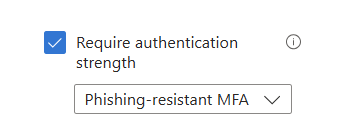

As for our grant controls, I recommend making these stricter than your policies covering all cloud apps because of the sensitivity of Microsoft Copilot or any generative AI tool your organization uses. In my example today, we are going to configure the policy to require phishing-resistant MFA.

As with anything I say here, please test these policies on test accounts and/or test devices before rolling them out to a pilot group or production. This policy would impact users on mobile devices if rolled into production, so keep those considerations in mind.
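
If you would rather script the policy than click through the portal, the configuration above can be sketched with the Microsoft Graph PowerShell SDK. Treat this as a starting point under stated assumptions, not the exact policy from the screenshots: the display name and the pilot group ID are placeholders, the scopes listed are what I would expect to need, and the policy is created in report-only mode so that nothing is enforced until you have tested it.

```powershell
# Assumption: these scopes are sufficient to read authentication strengths and create policies
Connect-MgGraph -Scopes 'Policy.ReadWrite.ConditionalAccess', 'Policy.Read.All', 'Application.Read.All'

# Look up the built-in phishing-resistant authentication strength by its display name
$strength = Get-MgPolicyAuthenticationStrengthPolicy |
    Where-Object DisplayName -eq 'Phishing-resistant MFA'

$policy = @{
    displayName   = 'TEST - Copilot - Require phishing-resistant MFA'  # hypothetical name
    state         = 'enabledForReportingButNotEnabled'                  # report-only mode
    conditions    = @{
        clientAppTypes = @('all')
        applications   = @{
            includeApplications = @(
                'fb8d773d-7ef8-4ec0-a117-179f88add510'  # Enterprise Copilot Platform
                'bb5ffd56-39eb-458c-a53a-775ba21277da'  # Security Copilot
            )
        }
        users          = @{
            # Placeholder: replace with the object ID of your test or pilot group
            includeGroups = @('<pilot-group-object-id>')
        }
    }
    grantControls = @{
        operator               = 'OR'
        authenticationStrength = @{ id = $strength.Id }
    }
}

New-MgIdentityConditionalAccessPolicy -BodyParameter $policy
```

Scoping the policy to a group rather than all users keeps the first rollout limited to test accounts, in line with the advice above.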

Validate

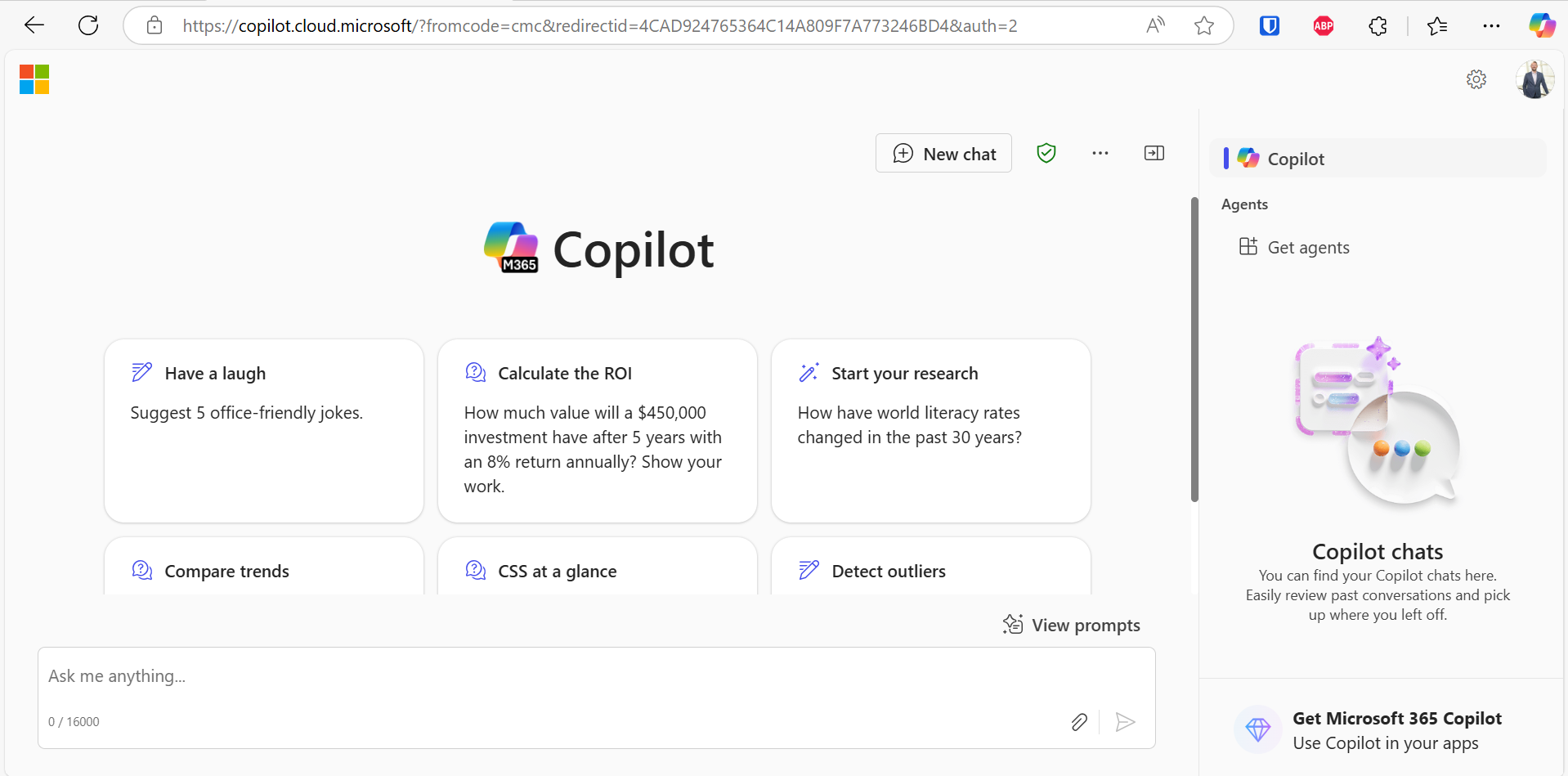

Now, let’s make sure our users can still get to Copilot when they are supposed to. The user below is signed in to their workstation and has Windows Hello enabled and functioning properly as a 2FA method.

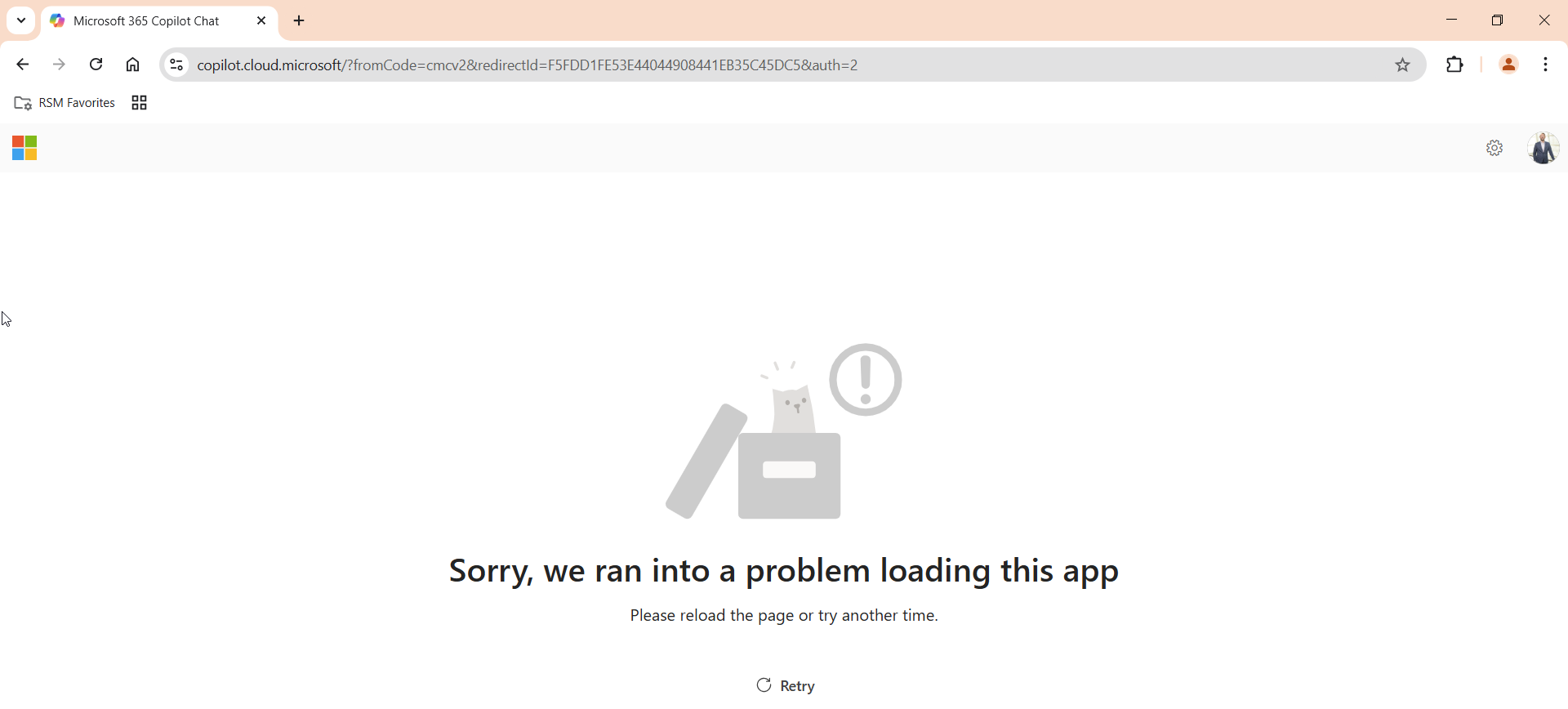

In this next instance, the user is coming from an untrusted device without Windows Hello and without a security key to authenticate, and so does not meet the phishing-resistant MFA requirement.

Typically I would suggest looking at the Entra ID sign-in logs to validate that your end users are getting the desired experience, and I would show you those logs here to prove our work is successful. In this instance I cannot. At the time of writing, the Entra ID sign-in logs show successful sign-ins when access was in fact blocked, and they show no entry at all when authentication does succeed. This may be temporary, but I believe it is related to the Copilot app being embedded in the Office365 Homepage app. Ideally this will get cleared up with time. For now, our end-user testing shows the policy working properly, which is more important.
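
If you want to check whether the logging behavior has changed in your own tenant, a sketch like the following pulls a test user's recent sign-ins from Microsoft Graph. It assumes the `AuditLog.Read.All` scope is enough for your account to read sign-in logs, and the user principal name is a hypothetical placeholder.

```powershell
Connect-MgGraph -Scopes 'AuditLog.Read.All'

# Hypothetical test account; replace with the user you tested with
$testUser = 'testuser@contoso.com'

# List recent sign-ins with the app name and the Conditional Access result
Get-MgAuditLogSignIn -Filter "userPrincipalName eq '$testUser'" -Top 25 |
    Select-Object CreatedDateTime, AppDisplayName, ConditionalAccessStatus,
        @{ Name = 'ErrorCode'; Expression = { $_.Status.ErrorCode } }
```

Compare the app names and results against what the test user actually experienced before trusting the logs for this policy.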

Conclusion

With all of our usage of Copilot and other AI apps, we also have to take responsibility for protecting them. They are not like any other enterprise app out there; we have to protect them even more. Take responsibility in your organization for requesting this change and for requesting extra levels of security for more sensitive apps.

If you need assistance deploying these policies, or find yourself encountering other issues, please feel free to reach out!